Accelerating iOS on QEMU with hardware virtualization (KVM)

*

Preface

While QEMU started out as a platform for emulating hardware (especially architectures not supported by the host CPU), later versions gained the ability to execute code using hardware-assisted virtualization. This can yield substantial performance benefits, since most executed opcodes are performed directly by the CPU, instead of being translated into a number of native opcodes that simulate the behavior of the original one.

Using virtualization requires a host CPU that supports the executing architecture. On an Intel-based machine (such as those we used for developing our iOS on QEMU project), virtualizing an architecture other than x86/x64 would be impossible. Therefore, when running iOS (an arm64 OS) on QEMU, we’d be using regular emulation. At first, the performance was more than adequate. But as our efforts to execute iOS on QEMU developed, and more parts of the operating system were brought up successfully, we started noticing degraded performance.

Modern ARM chips support hardware-assisted virtualization, similar to their x86 bretheren. If we were to run our version of QEMU on an ARM-based system, it should be possible to harness the virtualization capabilites of the underlying CPU in order to achieve near-native performance. This post documents the challenges we had to overcome in order to successfully boot our iOS system on QEMU using hardware-assisted virtualization.

Choosing a hardware platform

When moving away from an Intel-based laptop to an ARM-based system for development, the first question was, which platform to choose. Should we use an ARM server in the cloud? An ARM-based development board? A developer-friendly Android phone? Each of the options seemed to have its advantages.

An ARM server would easily be the most powerful of the bunch - but the selection of ARM-based dedicated servers is limited, they aren’t cheap, and we weren’t sure we’d get the level of access we’d need (our assumption was that recompiling the kernel would be required). Buying a physical server is an option we entertained for a short while, but with prices in the thousands of dollars, the idea was quickly discarded.

A used Android phone was another good candidate, but even developer-friendly phones might be difficult to work with. Using Android instead of a general-purpose Linux could be a limiting factor - and finding a phone with enough RAM to execute iOS in parallel with a full-blown Android environment would require us to go with a recent phone, which would not be cheap.

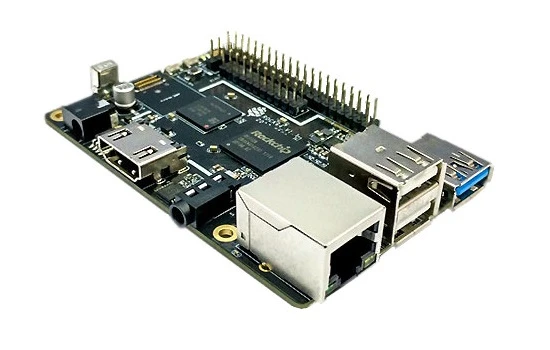

An ARM-based development board looked like a good choice, that would let us test and develop our code at a fraction of the price of the alternatives. Looking for good aftermarket support for kernel development, 64-bit ARM SOCs, and no less than 4GB of RAM, we chose Pine Rock64 as our testbed:

The board comes with an arm64 CPU (Cortex-A53-based RK3328), as well as 4GB of RAM. The choice seemed perfect for our purpose: when emulating an iPhone, we successfully used Cortex-A53 as our CPU, and while 4GB of RAM wasn’t ideal (we’d usually run our emulation with 6GB of RAM dedicated to QEMU), our tests indicated that using less RAM (for example, 2GB) had no significant impact at this point.

Virtualizing iOS on Rock64

Enabling KVM in QEMU

Once our board was up and running the latest version of Armbian, it was time

for our first attempt to run QEMU while using hardware-assisted virtualization

of the CPU in place of emulation. In theory, one simply has to add the

-enable-kvm switch to the command line…

Unfortunately, it wasn’t that easy. While QEMU launched successfully, iOS

wouldn’t boot. Attaching gdb on boot let us see the instructions we executing

correctly at first, but upon continuing, we’d quickly find ourselves in an

infinite loop located at 0xfffffff0070a0200. Based on the kernel symbols, it

was one of the vectors for interrupt/exception handling. Reaching that code

meant an exception occured early on, and the operating system still hasn’t

reached a point where it could be handled more gracefully (such as dumping

registers and memory content, combined with at least some sort of the issue

description). We had no choice but to step through the early initialization

of the kernel, one instruction after the other, until the jump to the exception

vector occured.

Enabling the MMU

At this point, it’s important to note, that when the kernel is loaded for the

first time, the MMU isn’t enabled yet, and the code is mapped to physical

addresses (the execution begins at 0x470a5098). It is only later during the

initialization that the MMU is enabled (once the page tables are initialized),

and the addresses switch to the familiar kernel mode (with higher bits set to

1). When looking at the kernel image in a disassembler such as Ghidra, however,

all code is mapped to kernel addresses. Therefore, the initialization code we

were inspecting could be found at 0xfffffff0070a5098.

Some of the initialization code contains loops that execute many times. In

order to make following the execution more effective, we used breakpoints that

we set at small intervals. This let us continue the execution, instead of

stepping through each instruction and loop. Using this technique, we quickly

found out the exception occured at the address 0x470a72e4:

0x470a72d4 msr vbar_el1, x0

0x470a72d8 movz x0, 0x593d

0x470a72dc movz x1, 0x3454, lsl 16

0x470a72e0 orr x0, x0, x1

0x470a72e4 ==> msr sctlr_el1, x0

0x470a72e8 isb

0x470a72ec movz x0, 0

0x470a72f0 msr tpidr_el1, x0

As we can see, at the mentioned address, the value 0x3454593d is written into

the SCTLR_EL1 register. As per ARM DDI 0487, D13.2.113, this is the system

control register, that provides a top level control of the system at EL0 and

EL1. Its first bit is used to enable address translation via the MMU. Since the

exception happens when executing this instruction (and bit 0 is set in the new

value for the register), the address translation configuration was an immediate

suspect.

There are several registers used to configure the MMU - namely, TCR_EL1,

TTBR1_EL1, and MAIR_EL1. We inspected the values stored in those registers

prior to enabling the MMU. One field that stood out was TCR_EL1.TG1, that

indicates the granule size for the TTBR1_EL1. The initialization code of our

iOS kernel sets the value of TCR_EL1.TG1 to 0b01 (the field is stored in

bits 31:30, and the value written to TCR_EL1 at 0x470a7244 is

0x000000226519a519). The value of 0b01 corresponds to a granule size of

16KB.

Notably, after stepping through the msr instruction at 0x470a7244,

inspecting the value of TCR_EL1 revealed a slightly different value of

0x00000022a519a519 - the granule size was read out as 0b10 (4KB)! This made

the reason for an exception upon enabling the MMU clear: while the page tables

set up by the initalization code were designed with a 16KB granule size in

mind, the actual granule size stored in TCR_EL1 when enabling the MMU was set

to 4KB. The MMU treated the page entries incorrectly, and a page fault occured

immediately upon enabling the MMU. But why would the value we attempted to set,

that of a 16KB granule, wouldn’t stick?

ARM DDI has the following to say about setting the value of TCR_EL1.TG:

If the value is programmed to either a reserved value, or a size that has not been implemented, then the hardware will treat the field as if it has been programmed to an IMPLEMENTATION DEFINED choice of the sizes that has been implemented for all purposes other than the value read back from this register.

It is IMPLEMENTATION DEFINED whether the value read back is the value programmed or the value that corresponds to the size chosen.

In our case, TCR_EL1.TG is read back as 0b10 after attempting to set it to

0b01. This indicates that in our case, the read back value is one that

corresponds to the granule size chosen (since it’s not the value we

programmed), and that the 16KB size has not been implemented in our CPU. We can

verify this assumption with the help of the ID_AA64MMFR0_EL1 register

(AArch64 Memory Model Feature Register 0). Its field TGran16 (at bits 23:20)

is used to indicate support for 16KB memory translation granule size: when the

bits are all set to 0, the granule size is not supported. Reading the value

of the register on our Rock64 developer board returns the value of

0x00001122 - thus, bits 23:20 are set to zero, and our CPU doesn’t implement

the 16KB granule size. In fact, by referring to section 4.2.1 in ARM DDI 0500

(ARM Cortex-A53 MPCore Processor Technical Reference Manual), we can see that

the value of 0x00001122 is there by design - i.e., Cortex-A53 cores do not

implement the 16KB granule size. Interestingly, the Cortex-A53 implementation

in QEMU ignores this, and implements the 16KB granule size (the value of

ID_AA64MMFR0_EL1 is 0x00001122, matching the reference manual, but setting

TCR_EL1.TG to 0b01 works as intended).

We had several options for the continuation of the project. We briefly considered patching the iOS kernel to use 4KB or 64KB pages, both supported by Cortex-A53. This idea was quickly dropped, as it requires a lot of effort, while its probability of success is questionable. While building the initial page table with different page sizes should be doable, we’d have to find all the places in the kernel code that manipulate the page table, and update them accordingly - not an easy feat to accomplish.

We, therefore, had to switch to an ARM core that supports 16KB pages. This brought us back to the choice of a hardware (ARM server, development board, or a phone), with one more requirement to fullfil: we’d look up the technical reference manual of the core powering the chosen hardware, and verify the granule size used by the iOS kernel is supported.

Unfortunately, most budget development boards use older ARM cores (either Cortex-A53 or Cortex-A72), with no support for the required granule size. We found one board with a core that had the required support - but it only had 1GB of RAM. Most Android phones woulnd’t fit our bill either - we’d need a modern phone with a recent chipset, and all the prior challenges of working with a phone would still exist.

We chose to switch to a cloud-based ARM server for initial development. Upon a successful proof-of-concept, with all the potential kinks in the kernel ironed out, we’d consider getting a more expensive development board or a phone. Our first attempt was with Amazon AWS, but we quickly found out their Graviton cores don’t work with 16KB pages (the upcoming Graviton 2 cores are based on ARM Neoverse N1, with the required support - but they weren’t available at the time). We ended up working with Packet, that has 2 different ARM offerings, one based on Cavium ThunderX CN8890 and the other on Ampere eMAG 8180, at competitive prices.

We began working with the Cavium-based server, and successfully passed the

initial point of failure at 0x470a72e4 (the MMU was enabled successfully, and

the execution continued to the following instruction without exceptions).

Apple-specific registers

Our joy was short-lived. Just several instructions later, at 0x470a72f4, we

encountered another instruction that failed and dropped us into the exception

handler (based on the value of the status register, we hit an invalid

instruction):

0x470a72ec movz x0, 0

0x470a72f0 msr tpidr_el1, x0

0x470a72f4 ==> mrs x12, s3_0_c15_c4_0

0x470a72f8 orr x12, x12, 0x800

0x470a72fc orr x12, x12, 0x100000000000

This cryptically looking mrs instruction attempts to read the value of a

special-purpose register. While many special registers have defined names (and

are displayed accordingly in disassemblers - all the aforementioned registers,

like SCTLR_EL1, are examples of such special registers) - some are not

defined by ARM, and are specific to an implementation. Access to those

registers is encoded with several fields (op0, op1, CRm, CRn, and op2) - the

combination of which is unique for each system register.

In our case, looking up this combination of fields revealed that it is a

special register specific to Apple (it appears as ARM64_REG_HID4 in XNU

sources, defined in pexpert/pexpert/arm64/arm64_common.h). While the purpose

of this register is unclear, we dealt with it (as well as other Apple-specific

registers) in our QEMU code, by simply storing whatever values were written

into those registers, and reading them back as-is. In fact, the code that dealt

with those registers was still present - why wouldn’t it execute, and why were

we experiencing an invalid instruction instead? The root cause is in QEMU’s

implementation. While most of QEMU’s code is indifferent to the underpinnings

of the guest’s CPU (whether it’s emulated in software, or virtualized in

hardware), certain features require different implementations. Handling of

special registers is one such feature.

When KVM is not in use (and the CPU architecture is emulated), the code that

implements the msr and mrs instructions uses callbacks in order to support

a range of special registers (target/arm/translate-a64.c):

/* MRS - move from system register

* MSR (register) - move to system register

* SYS

* SYSL

* These are all essentially the same insn in 'read' and 'write'

* versions, with varying op0 fields.

*/

static void handle_sys(DisasContext *s, uint32_t insn, bool isread,

unsigned int op0, unsigned int op1, unsigned int op2,

unsigned int crn, unsigned int crm, unsigned int rt)

{

const ARMCPRegInfo *ri;

TCGv_i64 tcg_rt;

ri = get_arm_cp_reginfo(s->cp_regs,

ENCODE_AA64_CP_REG(CP_REG_ARM64_SYSREG_CP,

crn, crm, op0, op1, op2));

/* ... */

if (isread) {

if (ri->type & ARM_CP_CONST) {

tcg_gen_movi_i64(tcg_rt, ri->resetvalue);

} else if (ri->readfn) {

TCGv_ptr tmpptr;

tmpptr = tcg_const_ptr(ri);

gen_helper_get_cp_reg64(tcg_rt, cpu_env, tmpptr);

tcg_temp_free_ptr(tmpptr);

} else {

tcg_gen_ld_i64(tcg_rt, cpu_env, ri->fieldoffset);

}

} else {

if (ri->type & ARM_CP_CONST) {

/* If not forbidden by access permissions, treat as WI */

return;

} else if (ri->writefn) {

TCGv_ptr tmpptr;

tmpptr = tcg_const_ptr(ri);

gen_helper_set_cp_reg64(cpu_env, tmpptr, tcg_rt);

tcg_temp_free_ptr(tmpptr);

} else {

tcg_gen_st_i64(tcg_rt, cpu_env, ri->fieldoffset);

}

}

/* ... */

}

The cp_regs of the DisasContext struct contains descriptors of various

coprocessor (special) registers for the emulated CPU. A descriptor of type

ARMCPRegInfo can be retrieved by using the op0, op1, CRm, CRn, and op2

fields, that uniqeuly identify the register. The descriptor struct may contain

callback functions for access control (accessfn, not shown in the snippet),

writing (writefn), and reading (readfn) of the register. While all the

standard special registers are pre-defined by the various core implementations

in QEMU, adding support for new special registers during emulation is as simple

as writing a couple of callback functions, and adding the new descriptors to

the cp_regs field of the CPU.

With KVM, the implementation differs considerably - but prior to diving into it, we need a basic understanding of virtualization and hypervisors.

Virtualization 101

The idea behind hardware-assisted virtualization is quite simple: we want to be

able to execute code directly on the host CPU, but to sandbox it from accessing

resources that do not belong to it. A good example would be kernel code. It

is, in theory, ommnipotent - it can reconfigure sensitive registers, and has

full access to all available memory: how can we avoid conflicts between two

kernels executing in parallel? The issue is solved by limiting the priveleges

of code execuing in a virtual environment on the CPU. Basically, certain

instructions, considered sensitive in their nature (such as instructions that

can configure important system registers), interrupt the normal execution of

the virtualized code, and control is returned to the host, where the software

responsible for the virtual machine (the hypervisor) can inspect the

instruction, and emulate its effects without affecting the host. These jumps

to the host are commonly called VM exits. And when code is executed in this

virtualizaion mode, it is said that it runs on a virtual CPU. Not all VM exits

are necessary (and the virtual CPU can be configured to either raise those

exits, or handle them internally), and some aspects of the virtual CPU

behavior have to be set up correctly (such as memory translation). The

hypervisor is responsible for all of the above.

In the QEMU/KVM world, KVM takes upon itself the low-level responsibilities of a hypervisor: it configures the virtual CPUs, schedules execution of code on those CPUs, and takes initial care of various VM exits as they happen. When an exit happens that KVM cannot handle on its own (such as communication with an emulated device, that KVM is not aware of), KVM transfers the handling up to QEMU. The reason behind keeping those transfers to QEMU to a minimum is performance: VM exits are already pretty expensive operations (the state of the virtual CPU and its registers must be saved and later restored, and the emulation of the instruction that caused the exit can be a lengthy process in its own right). Adding a transfer from KVM (kernel mode) to QEMU (user mode) slows down the execution ever further.

Special registers under KVM

So how does QEMU handle special registers, when KVM is enabled?. With hardware virtualization in use, the instructions are executed directly on the CPU. When a special register, unknown to the host processor (as is the case with Apple-specific registers, when running on a non-Apple CPU), is accessed, an exception is raised, and a VM exit occurs. The hypervisor has to handle the access prior to resuming the guest’s execution. How should KVM handle this?

Let’s start with examining the handle_exit function in

arch/arm64/kvm/handle_exit.c, that’s responsible for handling VM exits:

int handle_exit(struct kvm_vcpu *vcpu, struct kvm_run *run,

int exception_index)

{

/* ... */

exception_index = ARM_EXCEPTION_CODE(exception_index);

switch (exception_index) {

/* ... */

case ARM_EXCEPTION_TRAP:

return handle_trap_exceptions(vcpu, run);

/* ... */

}

}

When an exit occurs, KVM checks the CPU’s status register in order to find out

what cause the exit. When it determines the exception was caused by a trap (as

is the case with access to unsupported special registers), it calls the

handle_trap_exceptions function:

static int handle_trap_exceptions(struct kvm_vcpu *vcpu, struct kvm_run *run)

{

int handled;

/*

* See ARM ARM B1.14.1: "Hyp traps on instructions

* that fail their condition code check"

*/

if (!kvm_condition_valid(vcpu)) {

kvm_skip_instr(vcpu, kvm_vcpu_trap_il_is32bit(vcpu));

handled = 1;

} else {

exit_handle_fn exit_handler;

exit_handler = kvm_get_exit_handler(vcpu);

handled = exit_handler(vcpu, run);

}

return handled;

}

This function, in turn, retrieves a handler function and executes it. The

handler function is retrieved via kvm_get_exit_handler:

static exit_handle_fn kvm_get_exit_handler(struct kvm_vcpu *vcpu)

{

u32 hsr = kvm_vcpu_get_hsr(vcpu);

u8 hsr_ec = ESR_ELx_EC(hsr);

return arm_exit_handlers[hsr_ec];

}

When the trap occurs due to an unsupported special register, the returned

handler is kvm_handle_sys_reg, from arch/arm64/kvm/sys_regs.c:

int kvm_handle_sys_reg(struct kvm_vcpu *vcpu, struct kvm_run *run)

{

struct sys_reg_params params;

unsigned long esr = kvm_vcpu_get_hsr(vcpu);

int Rt = kvm_vcpu_sys_get_rt(vcpu);

int ret;

trace_kvm_handle_sys_reg(esr);

params.is_aarch32 = false;

params.is_32bit = false;

params.Op0 = (esr >> 20) & 3;

params.Op1 = (esr >> 14) & 0x7;

params.CRn = (esr >> 10) & 0xf;

params.CRm = (esr >> 1) & 0xf;

params.Op2 = (esr >> 17) & 0x7;

params.regval = vcpu_get_reg(vcpu, Rt);

params.is_write = !(esr & 1);

ret = emulate_sys_reg(vcpu, ¶ms);

if (!params.is_write)

vcpu_set_reg(vcpu, Rt, params.regval);

return ret;

}

That looks promising - KVM seems to be emulating the system registers! Looking

at the source of emulate_sys_reg exposes the details:

static int emulate_sys_reg(struct kvm_vcpu *vcpu,

struct sys_reg_params *params)

{

size_t num;

const struct sys_reg_desc *table, *r;

table = get_target_table(vcpu->arch.target, true, &num);

/* Search target-specific then generic table. */

r = find_reg(params, table, num);

if (!r)

r = find_reg(params, sys_reg_descs, ARRAY_SIZE(sys_reg_descs));

if (likely(r)) {

perform_access(vcpu, params, r);

} else if (is_imp_def_sys_reg(params)) {

kvm_inject_undefined(vcpu);

} else {

print_sys_reg_msg(params,

"Unsupported guest sys_reg access at: %lx [%08lx]\n",

*vcpu_pc(vcpu), *vcpu_cpsr(vcpu));

kvm_inject_undefined(vcpu);

}

return 1;

}

We see that KVM attempts to find a descriptor for the special register, and

calls the relevant callback function, if found (in perform_access). If it

isn’t found, however, a fault is injected into the guest (i.e., the guest will

received an undefined instruction exception once execution is resumed). So,

the logic looks similar to that of QEMU - however, the descriptor tables are

not the same. In fact, KVM can emulate a range of special registers that are

well defined in the ARM documentation - but no support for custom,

implementation-defined registers is provided. There is no interface that lets

a user-mode application (such as QEMU) to modify the descriptor table and thus,

introduce new registers. In fact, the CPU initialization code in QEMU (with

KVM enabled), behaves accordingly: it ignores all the special register

descriptors previously defined, and instead works with the list of supported

special registers, exposed by KVM. Our code for handling Apple-specific

registers would never run under KVM, and we were hitting an undefined

instruction early in our kernel initialization code.

We considered several options (and, in fact, implemented all of them, eventually).

Replacing access to Apple-specific registers with NOPs

This was the quick-and-dirty solution - it only required combing through the

kernel code to find all the msr and mrs instructions that accessed

Apple-specific registers. Since our callbacks under emulation simply stored

the values written into those registers for later access, we knew their

functionality was not critical for booting the kernel.

We did, however, run into a couple of places where NOPs wouldn’t work. Those

were spots where the register value was read prior to initalization. Remember,

that the mrs insruction reads the value of the special register into a

general-purpose register. When the register access was emulated, the value

written into the general-purpose register would be 0 (and it seemed to work).

However, when the instruction was replaced with NOP, the general-purpose

register would remain unchanged, holding its previous value - which could

change the flow of the execution. To handle those cases correctly, we chose

to replace reads from Apple-specific registers with zeroing of the destination

general-purpose register instead.

The downside of the solution was its specialization: it worked on our specific version of the kernel, and would require us to search for use of Apple-specific registers anew for every additional kernel we were to execute on our system.

Emulating Apple-specific registers with a kernel module

Linux kernel modules provide a simple, yet powerful infrastructure for running arbitrary code inside the kernel. We wanted to use a kernel module to hook the exit handler in KVM, and execute our code under certain conditions (namely, when the exit occured due to access to an Apple-specific register). This proved possible, but the solution wasn’t as elegant as we’d hoped.

Most of KVM’s internal code is marked static, making it impossible to link

against. Instead, those static functions had to be found dynamically by the

kernel module. Furthermore, all the relevant tables (both the descriptors of

the supported special registers, and the trap handlers) were stored in

read-only memory. In order to set up our own handler in place of

kvm_handle_sys_reg, we had to make the handlers table writeable first:

int disable_protection(uint64_t addr) {

/* Manually disable write-protection of the relevant page.

Taken from:

https://stackoverflow.com/questions/45216054/arm64-linux-memory-write-protection-wont-disable

*/

pgd_t *pgd;

pte_t *ptep, pte;

pud_t *pud;

pmd_t *pmd;

pgd = pgd_offset((struct mm_struct*)kallsyms_lookup_name("init_mm"),

(addr));

if (pgd_none(*pgd) || pgd_bad(*pgd))

goto out;

printk(KERN_NOTICE "Valid pgd 0x%px\n",pgd);

pud = pud_offset(pgd, addr);

if (pud_none(*pud) || pud_bad(*pud))

goto out;

printk(KERN_NOTICE "Valid pud 0x%px\n",pud);

pmd = pmd_offset(pud, addr);

if (pmd_none(*pmd) || pmd_bad(*pmd))

goto out;

printk(KERN_NOTICE "Valid pmd 0x%px\n",pmd);

ptep = pte_offset_map(pmd, addr);

if (!ptep)

goto out;

pte = *ptep;

printk(KERN_INFO "PTE before 0x%lx\n", *(unsigned long*) &pte);

printk(KERN_INFO "Setting PTE write\n");

pte = pte_mkwrite(pte);

*ptep = clear_pte_bit(pte, __pgprot((_AT(pteval_t, 1) << 7)));

printk(KERN_INFO "PTE after 0x%lx\n", *(unsigned long*) &pte);

flush_tlb_all();

printk(KERN_INFO "PTE nach flush 0x%lx\n", *(unsigned long*) &pte);

return 0;

out:

return 1;

}

Another downside of this approach (in addition to the aforementioned lack of elegancy), was code duplication: we already had the code for handling Apple-specific registers in QEMU, and it was a shame we had to rewrite it in the kernel module. The problem would become more serious, if we were to make the logic of handling those registers more complicated in the future.

Nonetheless, this approach still has its merits: it’s superior to manually patching the unsupported instructions in the iOS kernel, and provides a quick and easy development environment compared to modifying the kernel itself. The code for our kernel module can be found here.

Adding support for special register handling in QEMU

The final approach consisted of making the required modifications both to KVM and QEMU, introducing the option to delegate the handling of unknown registers to QEMU.

We introduced a new exit reason (exit reasons are used when KVM transfers

control back to QEMU, if the handling of the VM exit cannot be completed

in the kernel), that notifies QEMU of an attempt to access an unsupported

special register (KVM_EXIT_ARM_IDSR). The structure that contains information

about the exit was modified to include the data about the attempted access,

including the identification of the special register in question.

In turn, QEMU code was modified to be aware of the new exit reason, and handle it appropriately. The new handler queries the list of custom special registers defined during the initialization of the CPU, and if the list contains the register that caused the exit - the matching handler is called. Otherwise, KVM is notified that QEMU failed to handle the access, and an undefined instruction fault is injected into the guest, providing unmodified behavior when unsupported registers are encountered.

The Linux kernel with our patches can be found here (it’s based on the mainline Linux kernel v5.6rc-1), while the relevant QEMU modifications are already available in the master branch in our repo.

Floating-point instructions

Once we fixed the issue with Apple-specific registers (at the time, via simple patching of the iOS kernel), we ran into a yet another unhandled exception. This time, the instruction in question was using a floating-point register.

In ARM architecture, access to floating-point and vector (FP/SIMD) registers

and instructions is governed by the FPEN field of the CPACR_EL1)

(Architectural Feature Access Control) register. When the field is set to

0b11, FP/SIMD registers and instructions are accessible. Otherwise, when an

FP/SIMD register is accessed, a trap occurs (and that was the behavior we were

observing).

This trapping behavior was originally used by Apple to transfer control to KPP

(Kernel Patch Protection) - a special piece of code executing at the hypervisor

level, and responsible for continuously monitoring the kernel’s integrity

(More information about using CPACR_EL1 for KPP transfers can be found

here). However,

when initially working on executing iOS under QEMU emulation, we ran into

situations where KPP interfered with certain kernel modifications that we

performed as part of the boot process, and we disbled it. And Since KPP code

was no longer loaded, our initializtion code also set the value of

CPACR_EL1.FPEN, to avoid the traps (that would have no handling code).

Under KVM, however, the behavior seemed inconsistent with the value of

CPACR_EL1.FPEN that was set, and our initial response was to verify it while

debugging the kernel. It was set to the expected value at the beginning of the

kernel’s execution. Furthermore, querying the value at runtime, right before

executing the instruction causing the trap, returned the correct value as well.

Baffled by the issue, we dug into the details of QEMU’s implementation, and

found out that the value of CPACR_EL1 written during initalization, is not

forwarded to the virtual CPU (instead, it is only stored in the QEMU struct

describing the CPU). Not only that, but when the value is qeuried through

the debugger, QEMU’s gdb stub does not retrieve the value from the virtual CPU,

instead trusting the value stored in the QEMU struct). Thus, while for all

intents and purposes, it looked like the correct value was set, the actual

value in the virtual CPU was one that disabled FP/SIMD registers and

instructions.

Once we understood the problem, the solution was simple: patching the kernel

to set the correct value of CPACR_EL1.FPEN at the beginning of the execution.

Zeroing out memory regions

At that point, we already saw a single line of output on the screen (the Darwin banner), but the iOS kernel refused to boot further, once again getting stuck with an unhandled exception. We had to continue our debugging efforts - and, as before, comparing our execution step-by-step between QEMU with KVM and QEMU using regular emulation helped immensly.

When running under KVM, breakpoints at virtual addresses, set prior to

enabling the MMU, don’t work once the MMU is actually turned on. We, therefore,

had to set a breakpoint at a physical address after the MMU was enabled (e.g.,

at 0x470a7e8), and once it hit - we were able to set further breakpoints at

virtual addresses, that functioned without issues.

As we followed through the execution of the kernel, we noticed some

discrepancies: at certain points in execution, certain functions resulted in

different values in some of the registers. In most cases, the difference could

be attributed to entropy - the values would differ not only between executions

with and without KVM, but also between different executions with the same

technology. In fact, we were probably looking at the initialization of ASLR in

the kernel. However, upon further examination, we finally noticed a difference

that was specific to executions under KVM: at 0xfffffff0071ba04c, after

calling bzero, the value of the zeroed out region was different. While with

emulation, the region was modified as expected (192 bytes were zeroed out, as

per the argument), under KVM several bytes before the passed address were set

to 0, as well.

As it turns out, bzero (a function that’s used to zero out memory buffers)

has an optimized path. It checks the size of the buffer, and if it’s bigger

than a certain threshold (0x80), it uses the special dc zva opcode to zero

out chunks of the buffer (of size 0x40). dc zva is an opcode that zeroes out

a region of memory pointed by its single register argument. It ignores a

certain number of LSBs in the argument, and thus zeroes out a region bigger

than a single byte. But the number of ignored bits is implementation-defined.

Since the iOS kernel is compiled for a specifc ARM SoC, the number of ignored

bits is known ahead of time, and the code is built with that number in mind

(in this case, a buffer of 0x40 bytes is expected to be zeroed out - meaning,

6 LSBs are ignored).

The ARM servers we were working on, however, ignored a higher different number

of LSBs. Therefore, when dc zva was called inside bzero, more bytes than

expected were set to 0, and we got an unexpected behavior. The solution to this

problem was simple: we patched the flow of bzero to avoid the optimized path

that uses dc zva, instead forcing it to the path that uses regular stp

opcodes to zero out the memory. Removing the condition from the branch at

0xfffffff0070996d8 was the only required change. While a bit slower, this

ensured that the execution of the bzero was consistent across different CPUs.

Interrupt handling

While the system continued to boot, it’d get stuck eventually, since our code depends on the presence of the clock interrupt. With emulation, the interrupt we set up is delivered just fine, but with the default options of KVM, raising interrupts is delegated to the interrupt controller, and stays out of QEMU’s hands.

The iOS kernel, however, expects the clock interrupt to be preconfigured and routed to a certain pin on the CPU. Since the virtualized interrupt controller is not pre-configured in this manner, code that depends on the clock interrupts wasn’t being called at all.

Configuring the interrupt controller is a complex task, and should be performed

from the iOS kernel itself (which means writing a custom driver for the

controller). We found an easy alternative: KVM/QEMU supports using an emulated

interrupt controller - it’s just not the default option. By adding the

kernel-irqchip=off argument to our machine’s options, we were able to force

it to use the emulated interrupt controller exposed by QEMU, and code that

depends on the clock interrupts began to work.

Known issues

At this point, the system was working for the most part, but certain problems still occured. In this section, we’ll discuss the issues that we discovered, but chose not to address due to the prohibitive cost of fixing them fundamentally. We hope to introduce alternative approaches that will bring back the functionality affected by these issues.

MMIO granularity

With the interrupt handling corrected, the next problem we ran into involved MMIO, and our fake task port. The port is used to enable user-mode code to inject arbitrary code into kernel space, and the idea is based on mapping the virtual memory of the real port as an MMIO. This gives us control over the data returned to the kernel whenever the port is accessed. Furthermore, upon first access, we can make a copy of the port at a different address - and the MMIO access operation can serve as a handy trigger that the real task port for the kernel is ready. The kernel, however, kept crashing upon access to the address of the task port.

The issue was difficult to figure out, since everything seemed to be working correctly in other places where MMIO is used (such as the UART port). Eventually we found out that KVM performs MMIO at the granularity of a single page. In other words, if a region is marked for MMIO, it will be page-aligned, regardless of the intended size.

In our case, we wanted to mark a single pointer (64 bits) for MMIO, and leave the rest of the memory as is. However, due to the MMIO granularity of KVM, all the data around the task port pointer would be routed to MMIO, as well - but that information wasn’t available (our MMIO code for the task port was programmed to handle access to the task port pointer only).

Emulating a whole page of data over MMIO was out of scope for this PoC (and would probably impact the performance considerably, assuming that page was frequently accessed). We chose to disable the fake task port functionality for now.

Implementation-defined registers and user-space

At this point, our iOS system was booting correctly. However, a single issue remained: the TCP tunnel (detailed in the previous post), wasn’t working anymore. It turns out that access to implementation-defined registers (such as the one we were using for communication between the guest OS and the host system) is not allowed at EL0, as per ARM documentation. And so, despite our efforts to introduce support for custom handling of implementation-defined registers under KVM, the TCP tunnel (that is a user-space program, running at EL0), is currently unable to run correctly.

In the future, we hope to move the functionality of TCP tunnel (and guest services, in general), into the kernel. That will alleviate the issue, and tunnelling connections to and from the system running under KVM will be possible.